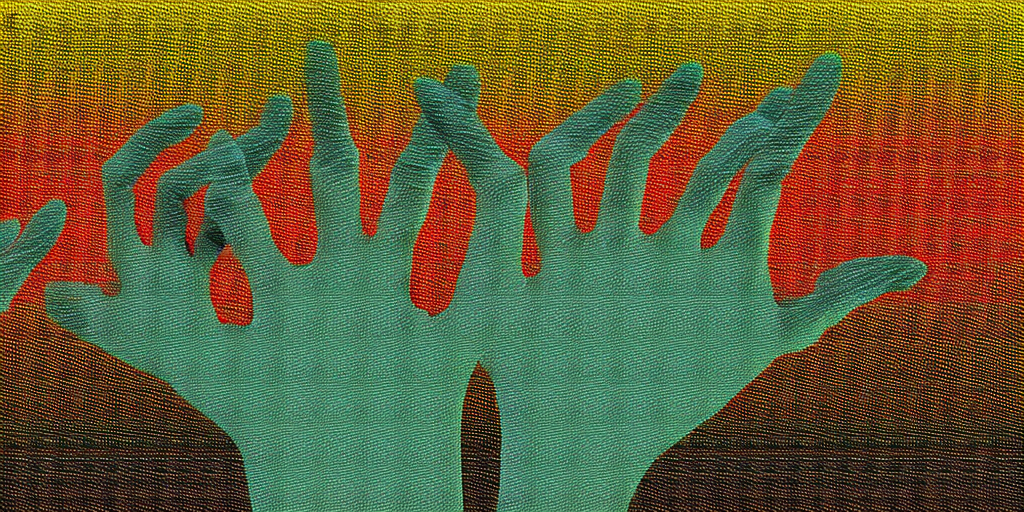

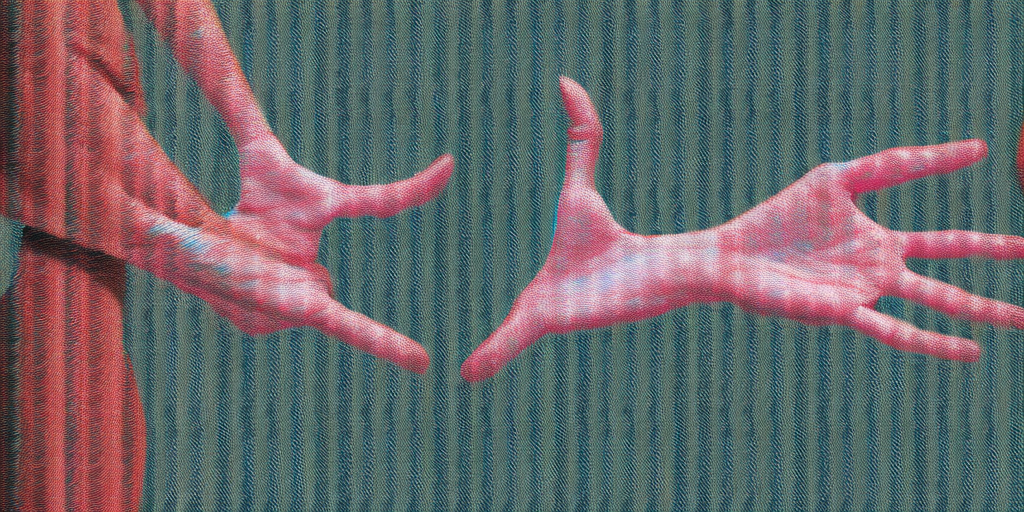

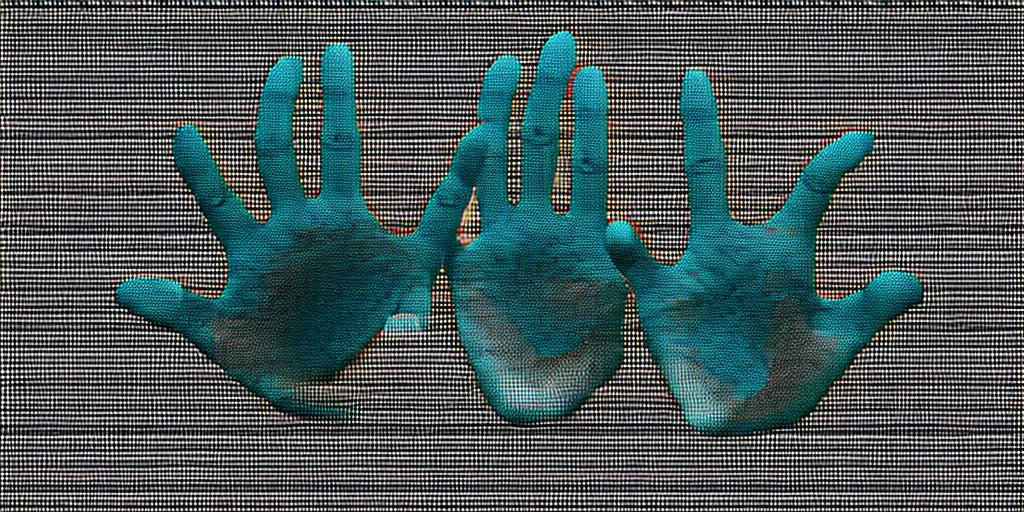

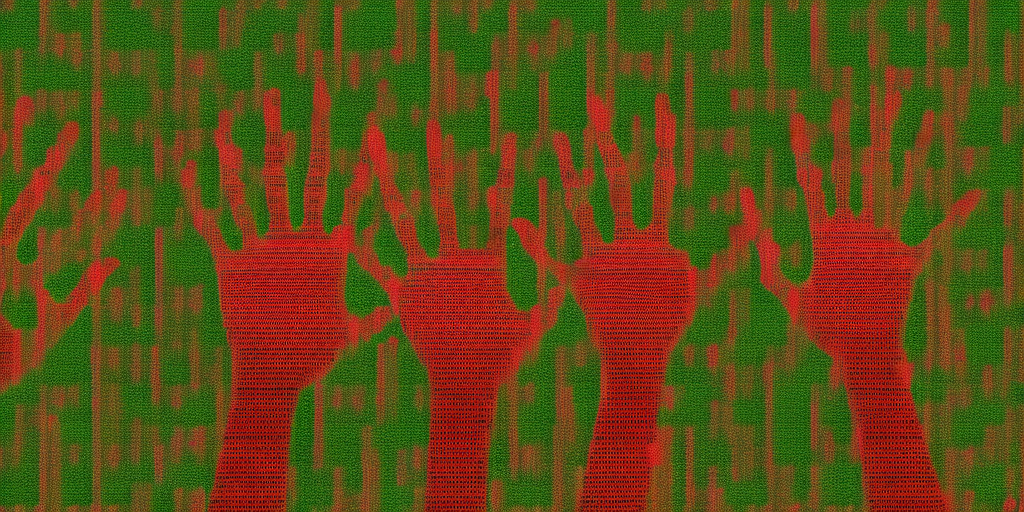

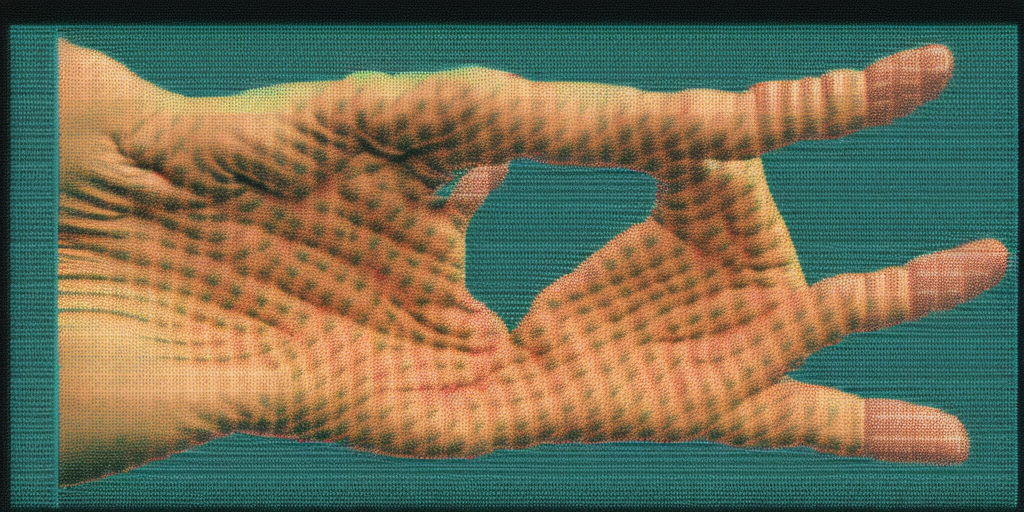

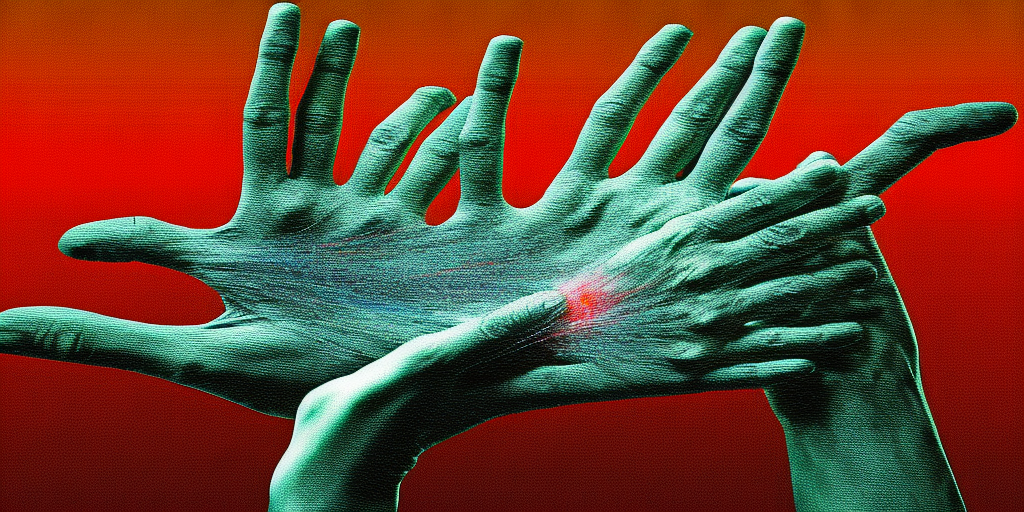

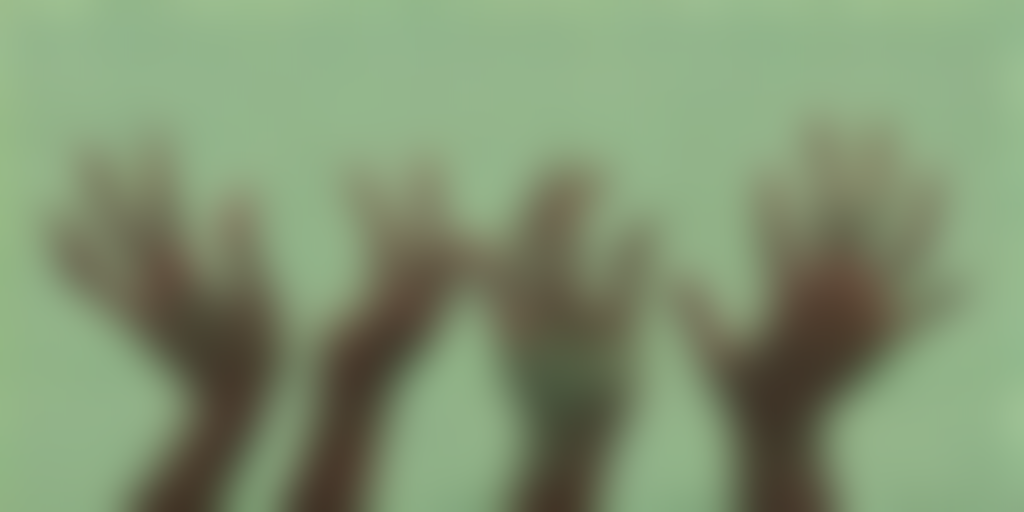

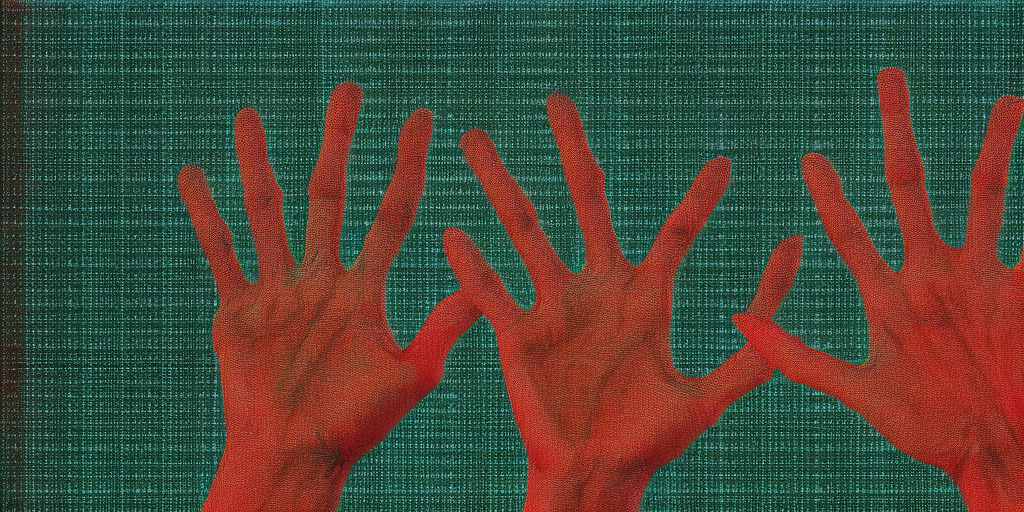

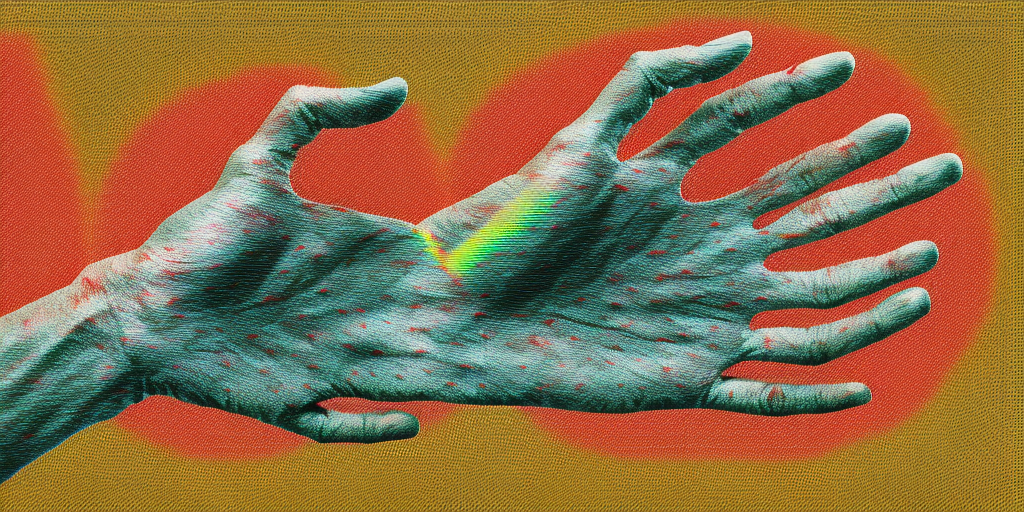

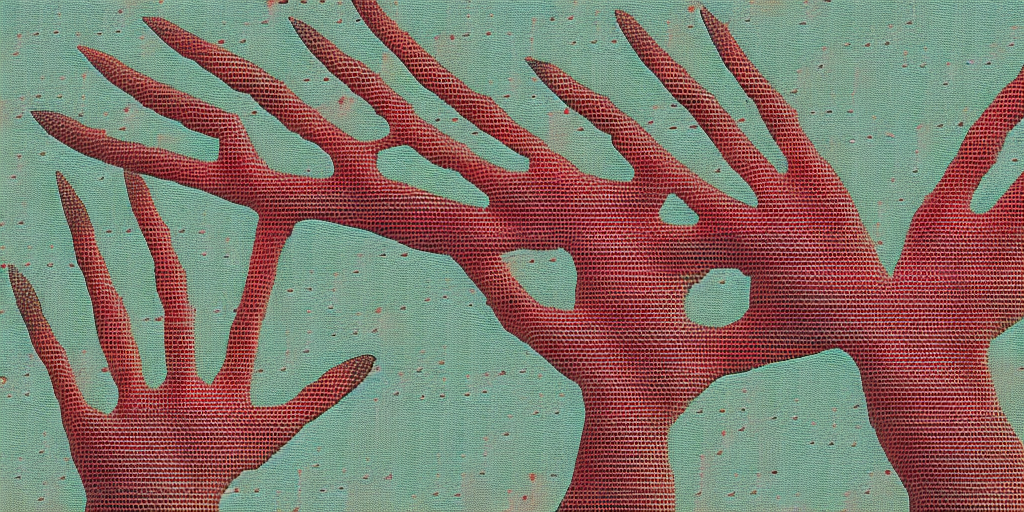

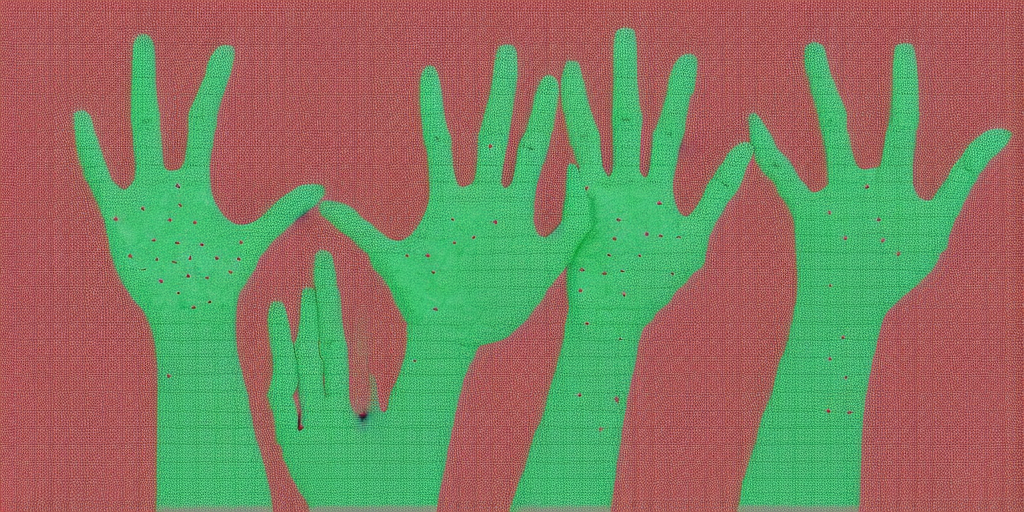

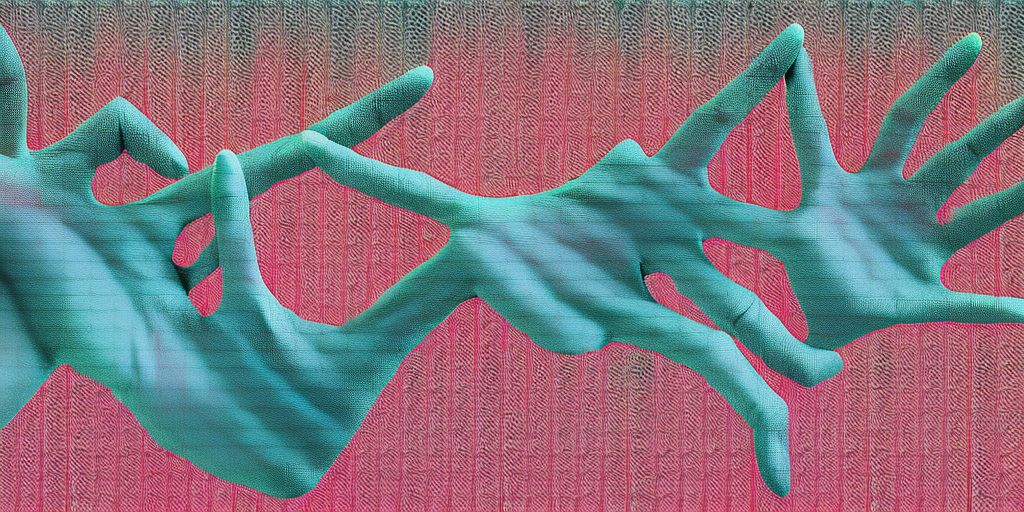

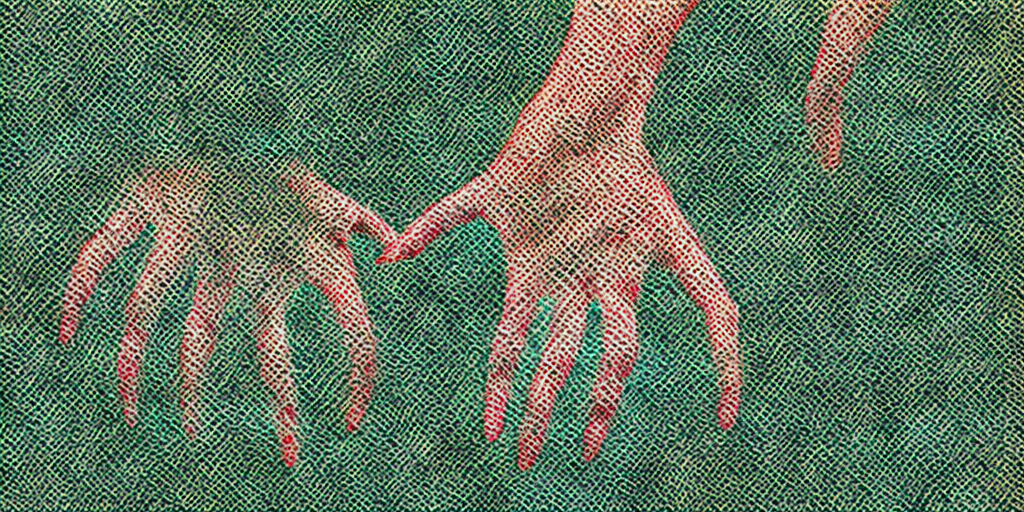

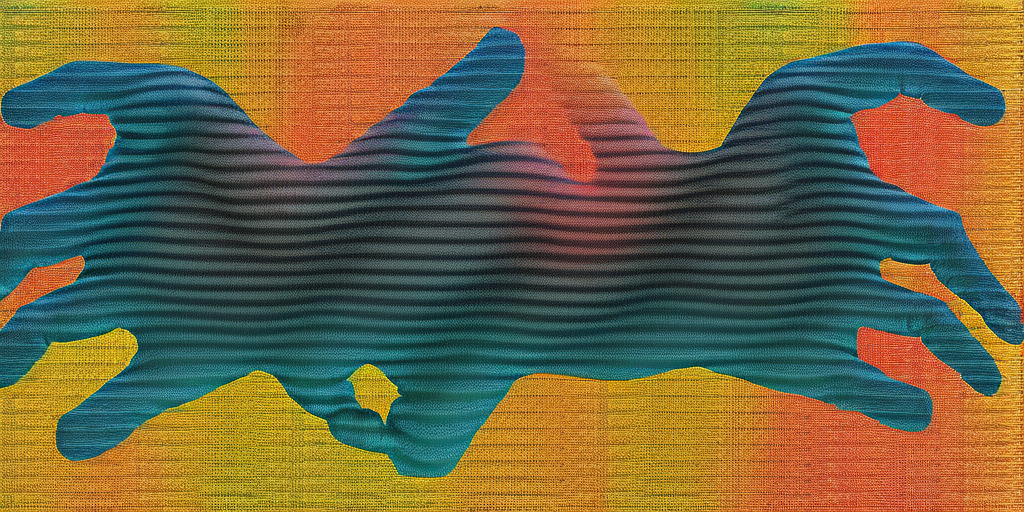

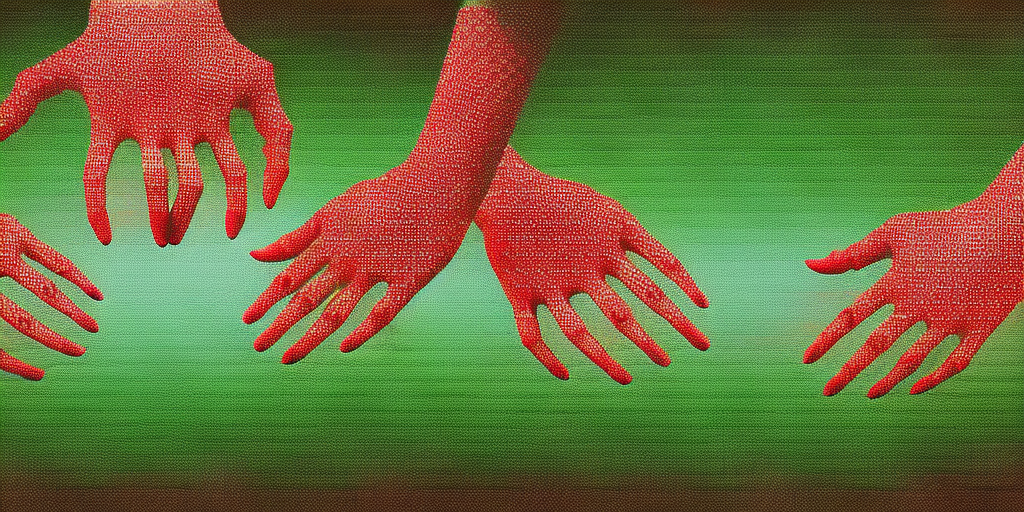

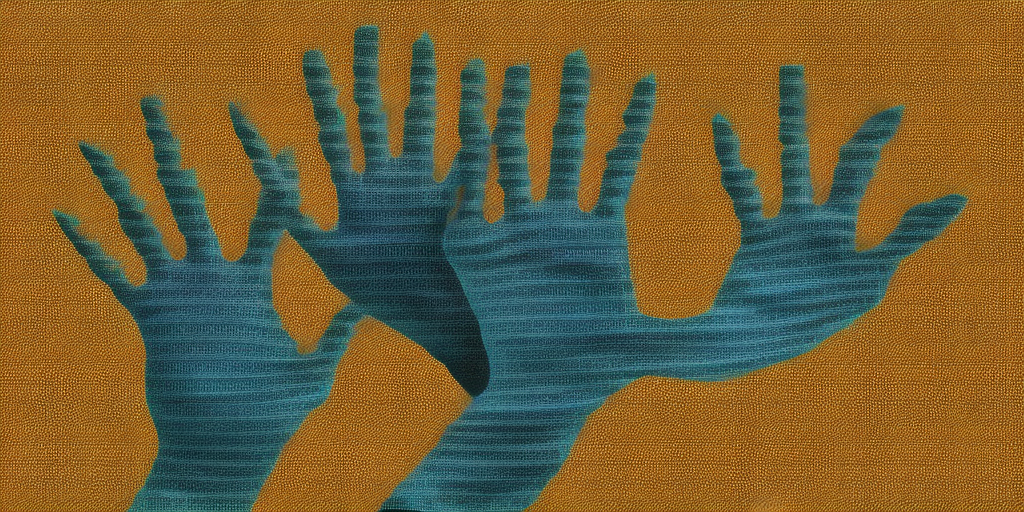

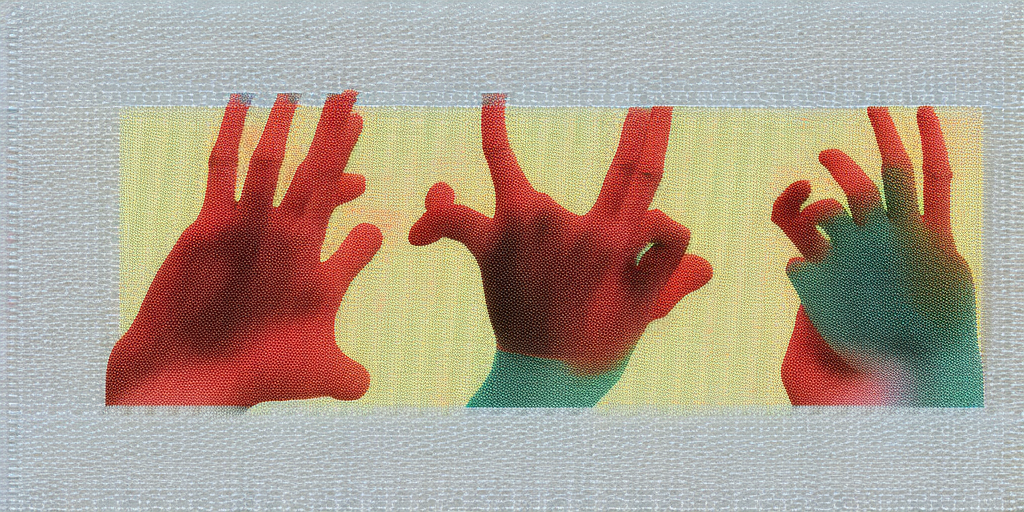

Gaussian Noise, Human Hands (2023)

Gaussian Noise, Human Hands is a collection of AI-generated images of things AI image generators cannot produce.

Diffusion models all start in the same place: a single frame of random, spontaneously generated Gaussian noise. The model creates images by trying to work backward from the noise to arrive at an image described by the prompt. So what happens if your prompt is just “Gaussian noise”?
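For readers who want to see that backward walk in miniature, here is a toy sketch, not the code behind any real image generator. It assumes a fixed `target` grid as a stand-in for "the image described by the prompt," and every name in it (`gaussian_frame`, `toy_denoise_step`, `generate`) is hypothetical. A real diffusion model uses a trained network to predict the noise to remove at each step; this sketch only nudges each pixel toward the target.

```python
import random


def gaussian_frame(width, height, rng):
    """Return a height x width grid of samples from a standard normal distribution."""
    return [[rng.gauss(0.0, 1.0) for _ in range(width)] for _ in range(height)]


def toy_denoise_step(frame, target, strength):
    """Move every pixel a fraction of the way toward the target.

    Assumption: the fixed target stands in for a prompt-conditioned model.
    """
    return [
        [p + strength * (t - p) for p, t in zip(row, target_row)]
        for row, target_row in zip(frame, target)
    ]


def mean_abs_diff(a, b):
    """Average absolute per-pixel difference between two grids of equal size."""
    total = sum(abs(x - y) for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))
    count = sum(len(row) for row in a)
    return total / count


def generate(target, steps=20, strength=0.2, seed=0):
    """Start from pure noise and step toward the target, recording the distance."""
    rng = random.Random(seed)
    frame = gaussian_frame(len(target[0]), len(target), rng)
    history = [mean_abs_diff(frame, target)]
    for _ in range(steps):
        frame = toy_denoise_step(frame, target, strength)
        history.append(mean_abs_diff(frame, target))
    return frame, history


if __name__ == "__main__":
    # A 4 x 4 horizontal gradient plays the role of the prompted image.
    target = [[x / 3 for x in range(4)] for _ in range(4)]
    _, history = generate(target)
    print("distance from target at start:", history[0])
    print("distance from target at end:  ", history[-1])
```

In this toy version the distance to the target shrinks at every step. The prompt “Gaussian noise” muddies exactly this picture, because the destination is supposed to look like the starting point.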

In theory, the machine would simultaneously aim to reduce and introduce noise to the image. This is like a synthetic paper jam: remove noise in order to generate “patterns” of noise; refine that noise; then remove noise to generate “patterns” of noise; etc.

The result of asking the AI system to draw Gaussian noise is an image of failure. Whether it is an error is another question. Is the system derailed by the request? Or is it generating a representation of error, an abstraction it has somehow learned about the prompt “Gaussian noise”?

These systems are also notoriously bad at drawing hands. The concentration of folds, the similarity between fingers, the system’s inability to count: all present limits on the model’s capability to craft convincing hands.

In this spirit, I have opted to create a series of “Impossible Images” for the AI to draw: the simple prompt “Gaussian Noise, Human Hands.” The results show the visual interference of attempting to draw Gaussian noise and embed a direction toward the production of failed human hands. Some include an additional layer of failure: they are blurred, the result of being recognized as pornographic by the content moderation system.

I propose that error states, and technological limitations, are a way to see into the latent aesthetics of machines. In the Situationist critique of spectacle, the only truly generative images that machines can produce are mistakes. When they operate according to a set of commands, or are used the way they are described, they are simply tools. When they introduce distortions and failures, we see their limits as mechanisms for something else. Something unintended. And it is the limits of technology that fascinate me.

I am interested in how we might read these images, whether the Gaussian noise error is representational or an actual glitch: Ce n'est pas une erreur. In any case, there is something compelling about steering into the model’s flaws to find what it does when it is hitting all the wrong notes. It may not be a proper détournement, but the results interest me for the challenge they pose to any reading.